We've all seen projects where seemingly sensible recommendations fail to survive contact with reality. Sometimes this is outside anyone's control – we're all at the mercy of events – but it's also much more likely when there is a gap in the supporting evidence and/or analysis.

A good discipline when you're formulating recommendations is therefore to run an exercise that explicitly surfaces and explores the different ways things could go wrong.

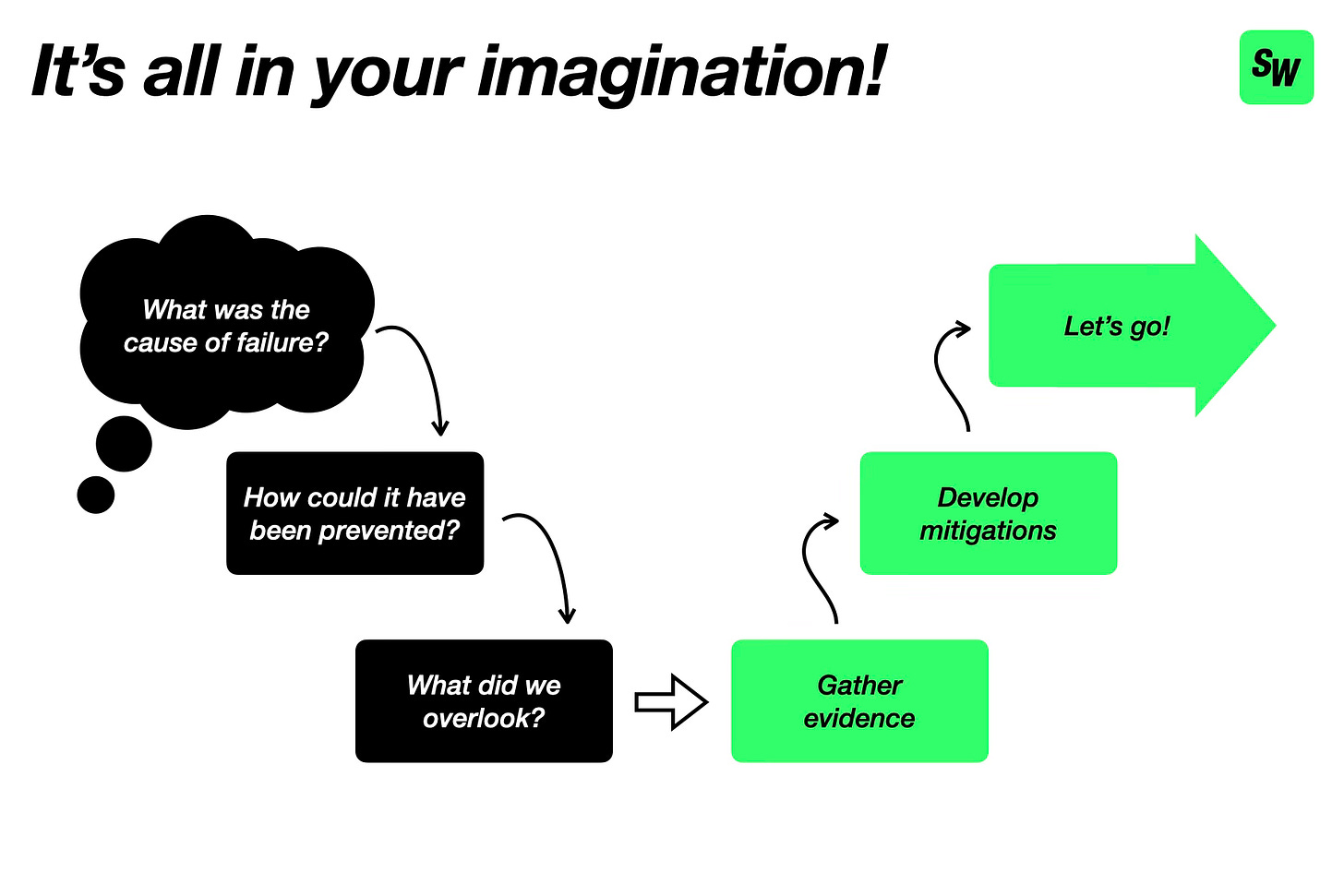

Start by imagining a future where your recommendation has failed spectacularly, and then for each scenario that comes to mind ask yourself:

- What was the cause of failure?
- What actions could we have taken to prevent this?
- What evidence to support these actions did we miss or ignore?

This should help identify gaps and weaknesses in the analysis that underpins your recommendation. Armed with these insights, you can do the work to close the gaps, update your understanding of the problem space, and refine your analysis and recommendation accordingly.

To test things even further, make a list of the key assumptions embedded in your analysis (some of which you may have stated in the course of the project, others you may have taken as read). For each assumption, try reversing it and then think through how your analysis and recommendation would change if the reversed assumption were true. This is a great way to probe for weaknesses that you might not otherwise anticipate.

Reversing assumptions can also double up as a technique for reframing problems and generating creative or counterintuitive solutions. More on that another time!